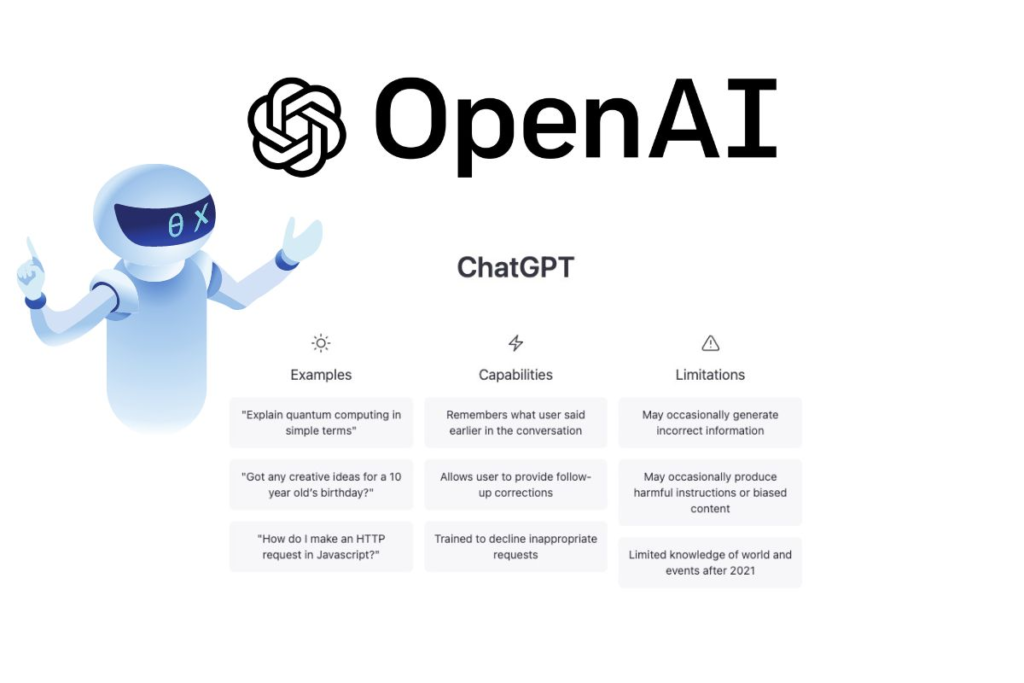

The field of language models has grown rapidly over the past few years. From early models with limited capabilities to state-of-the-art systems like ChatGPT and GPT-4, which can generate human-like text and perform a wide range of tasks, the progress has been remarkable, and it shows no sign of slowing down. In this blog post, we will explore the developments we can expect to see in language models over the next few years and how they might affect the capabilities of models like ChatGPT and GPT-4.

The Rise of Multimodal Language Models

One trend we can expect is the rise of multimodal models: models that can process and analyze several kinds of input, such as text, images, and audio. These models are becoming increasingly important because much of the data we interact with every day, from videos and social media posts to voice assistant requests, combines more than one modality.

In 2021, OpenAI released DALL-E, which generates images from textual descriptions, and CLIP, which learns to match images with text and can be used for image recognition. Both are multimodal models that combine language processing with visual processing to achieve their capabilities. It is likely that we will see more models that combine different modalities to achieve even more advanced capabilities.
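To make the pairing of language and vision more concrete, here is a minimal sketch of the matching step used by CLIP-style models: an image and several candidate captions are represented as vectors in a shared embedding space, and the caption whose vector is most similar (by cosine similarity) to the image vector is chosen. The embeddings below are hypothetical, hand-written stand-ins for what trained image and text encoders would produce, and the temperature value is an arbitrary illustrative choice.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def match_captions(image_emb, caption_embs, temperature=0.01):
    """Return (best_caption, {caption: probability}) for one image embedding.

    caption_embs maps caption text to an embedding in the same space as the image.
    """
    captions = list(caption_embs)
    sims = [cosine_similarity(image_emb, caption_embs[c]) for c in captions]
    probs = softmax(sims, temperature)
    best = captions[probs.index(max(probs))]
    return best, dict(zip(captions, probs))


# Hypothetical 3-dimensional embeddings (real models use hundreds of dimensions).
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.1, 0.9, 0.3],
    "a photo of a car": [0.2, 0.1, 0.9],
}

best, probs = match_captions(image_embedding, caption_embeddings)
print(best)  # a photo of a dog
```

Because the candidate captions can be any text, this setup lets one model score categories it was never explicitly trained to label.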

Improved Efficiency and Speed

One major limitation of current language models like ChatGPT and GPT-4 is their high computational cost and long inference times. This limits their usefulness in real-time applications and puts running them out of reach for anyone without access to powerful hardware. However, there are ongoing efforts to improve the efficiency and speed of these models.

One approach is model compression, which reduces the size and complexity of a model while preserving as much of its performance as possible. Common techniques include pruning (removing weights that contribute little), quantization (storing weights at lower numerical precision), and distillation (training a smaller student model to imitate a larger teacher model). Another approach is specialized hardware such as Google’s Tensor Processing Units (TPUs) and the Tensor Cores in Nvidia GPUs, which are designed to accelerate the matrix operations at the heart of deep learning.
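The three compression techniques named above can be sketched on a toy list of weights. This is a minimal, pure-Python illustration under simplifying assumptions: a single scale for the whole tensor in quantization, unstructured magnitude pruning, and a distillation loss computed for one example. Production frameworks operate on tensors and offer many variants of each technique.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:k])  # indices of the k smallest-magnitude weights
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-softened softmax; higher temperature gives flatter outputs."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]
q, scale = quantize_int8(weights)
print(q)  # [42, -127, 3, 88, -5, 61]
print(magnitude_prune(weights, 0.5))  # [0.0, -1.27, 0.0, 0.88, 0.0, 0.61]
print(distillation_loss([2.0, 0.5, -1.0], [2.5, 0.3, -1.2]))
```

Storing weights as 8-bit integers instead of 32-bit floats cuts their memory by a factor of four, while turning pruned weights into actual speedups usually depends on hardware and library support for sparsity.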

Privacy and Security

As language models become more advanced and more widely used, concerns about privacy and security are likely to grow. Models like ChatGPT and GPT-4 are trained on massive amounts of data, including user-generated text, which raises questions about who owns this data and how it is used. There is also the risk of malicious actors using language models to generate convincing fake text or to extract sensitive information from text.

To address these concerns, there will be a need for increased transparency and accountability in the development and deployment of language models. This includes ensuring that users are aware of how their data is being used and giving them control over their data. It also involves developing techniques to detect and mitigate the risks of malicious use of language models.
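One concrete, if limited, mitigation for the data concerns above is to scrub obvious personal identifiers from text before it is used for training or stored in logs. The sketch below assumes two simple regular expressions, one for email addresses and one for phone-number-like digit runs; the patterns and placeholder tokens are illustrative choices, they miss many real-world formats, and the phone pattern will also catch some other numbers such as dates. Real PII detection needs much more than regexes.

```python
import re

# Illustrative patterns only; they are neither complete nor precise.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Optional leading +, then a digit, 7+ digits/spaces/dots/dashes, then a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s.-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or +1 555-123-4567."
print(redact(sample))  # Contact Jane at [EMAIL] or [PHONE].
```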

New Applications and Use Cases

As language models continue to improve and become more versatile, we can expect to see new applications and use cases emerge. For example, language models could support healthcare by analyzing medical records and helping doctors make better diagnoses. They could also automate work such as customer service and translation, freeing people to focus on more complex problems.

Language models could also be used to improve education by providing personalized learning experiences for students. For example, a language model could analyze a student’s writing and provide feedback on areas for improvement. It could also generate quizzes and other learning materials based on the student’s individual needs and learning style.

Zero-Shot and Few-Shot Learning

Multimodal models, improved efficiency and speed, and a greater focus on privacy and security are not the only developments on the horizon. Another promising one is the emergence of zero-shot and few-shot learning. Traditionally, language models have needed large amounts of labeled data to perform well on a specific task. With zero-shot and few-shot learning, models can perform new tasks with only a few examples, or none at all.

Zero-shot learning means using a model to perform a task it was never explicitly trained on, without showing it any examples of that task. For example, a language model trained on general text can be asked to classify the sentiment of a product review from a plain-language instruction alone, without any additional training.

Few-shot learning, on the other hand, gives the model a small number of examples of the new task, either directly in the prompt or through a brief round of fine-tuning. This is particularly useful when labeled data is scarce or expensive to obtain. For example, a language model could learn to summarize legal documents from only a handful of example summaries.
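In the prompting setting popularized by GPT-3, the difference between zero-shot and few-shot use often comes down to what goes into the prompt. The helper below is a hypothetical sketch (the function name and the `Input:`/`Output:` layout are assumptions, not any particular product’s API): with no examples it builds a zero-shot prompt, and with a few input/output pairs it builds a few-shot prompt. The resulting string would then be sent to whichever model you are using.

```python
from typing import Optional


def build_prompt(instruction: str, query: str,
                 examples: Optional[list[tuple[str, str]]] = None) -> str:
    """Assemble a zero-shot (no examples) or few-shot (some examples) prompt."""
    parts = [instruction.strip(), ""]
    # Each demonstration shows the model one input and the desired output.
    for example_input, example_output in examples or []:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # The real query ends with an open "Output:" for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


instruction = "Classify the sentiment of each review as positive or negative."

zero_shot = build_prompt(instruction, "The battery died after two days.")

few_shot = build_prompt(
    instruction,
    "The battery died after two days.",
    examples=[
        ("Great screen and fast shipping.", "positive"),
        ("It stopped working within a week.", "negative"),
    ],
)
print(zero_shot)
print("---")
print(few_shot)
```

No model weights change in either case; the examples only steer the model at inference time, which is why this form of few-shot learning needs no extra training.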

The emergence of zero-shot and few-shot learning has the potential to make language models more adaptable and flexible in their capabilities. This could lead to a wide range of new applications, from personalized language models that can learn from a user’s individual writing style to models that can quickly adapt to new domains or languages.

Bias, Transparency, and Accountability

In addition to these developments in the technology itself, there are also important social and ethical considerations to take into account as language models become more advanced and widely used. One major concern is the potential for these models to perpetuate and even amplify biases present in the data they are trained on.

A language model is only as unbiased as its training data: if that data reflects existing societal biases and discrimination, the model is likely to learn and reproduce them. This can have serious real-world consequences, such as reinforcing stereotypes or unfairly disadvantaging certain groups.

To address these concerns, it is important for researchers and developers to prioritize the use of diverse and representative data in training language models. This includes considering the sources of the data and ensuring that it reflects a variety of perspectives and experiences.
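A first step toward checking whether training data is diverse and representative is simply to measure its composition. The sketch below counts records per value of one metadata field and flags any group whose share falls below a chosen threshold. The `language` field, the records, and the 10% threshold are hypothetical choices for illustration; what counts as representative depends on the application.

```python
from collections import Counter


def composition_report(records: list[dict], field: str,
                       min_share: float = 0.10) -> dict[str, tuple[float, bool]]:
    """Return {group: (share, is_underrepresented)} for one metadata field."""
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        group: (n / total, n / total < min_share)
        for group, n in counts.most_common()  # largest groups first
    }


# Hypothetical training records tagged with a 'language' field.
records = (
    [{"language": "en"}] * 80
    + [{"language": "es"}] * 15
    + [{"language": "sw"}] * 5
)

for group, (share, flagged) in composition_report(records, "language").items():
    note = "  <- underrepresented" if flagged else ""
    print(f"{group}: {share:.0%}{note}")
```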

Another important consideration is the need for transparency and accountability in the development and deployment of language models. Users and stakeholders should have access to information about how these models are trained, what data is used, and how they make decisions. Additionally, there needs to be a clear framework for evaluating the performance and potential biases of these models.
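As one small piece of such an evaluation framework, a common check compares how often a model produces a favorable outcome for different groups. The sketch below computes per-group positive rates and the largest gap between them, often called the demographic parity difference. The predictions are hypothetical placeholders for outputs you would collect from the model under test, for example by filling the same prompt template with different group terms.

```python
from collections import defaultdict


def positive_rates(predictions: list[tuple[str, int]]) -> dict[str, float]:
    """Share of favorable (1) predictions for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, label in predictions:
        totals[group] += 1
        positives[group] += label
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: dict[str, float]) -> float:
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical (group, prediction) pairs: 1 = favorable output, 0 = unfavorable.
predictions = (
    [("group_a", 1)] * 7 + [("group_a", 0)] * 3
    + [("group_b", 1)] * 4 + [("group_b", 0)] * 6
)

rates = positive_rates(predictions)
print(rates)  # {'group_a': 0.7, 'group_b': 0.4}
print(round(parity_gap(rates), 2))  # 0.3
```

A nonzero gap is a signal worth investigating rather than proof of bias on its own; a real evaluation would use many templates, larger samples, and more than one metric.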

Final Thoughts

As language models continue to evolve and become more sophisticated, they are likely to play an increasingly important role in our lives. From improving communication and productivity to advancing scientific research, the potential applications of these models are vast and varied.

However, as we move forward, it is important to carefully consider the potential social and ethical implications of these technologies. By prioritizing transparency, accountability, and the use of diverse and representative data, we can work to ensure that these models are used to benefit society as a whole.